Building Performant Voice Agents in India

A complete guide to building and running high-performance voice agents in India.


India is one of the world's fastest-growing regions for voice agents. With LiveKit Cloud's regional agent deployment in Mumbai, you can deploy your entire voice stack in India to minimize latency.

Key takeaway: co-locate your AI models near your voice agent

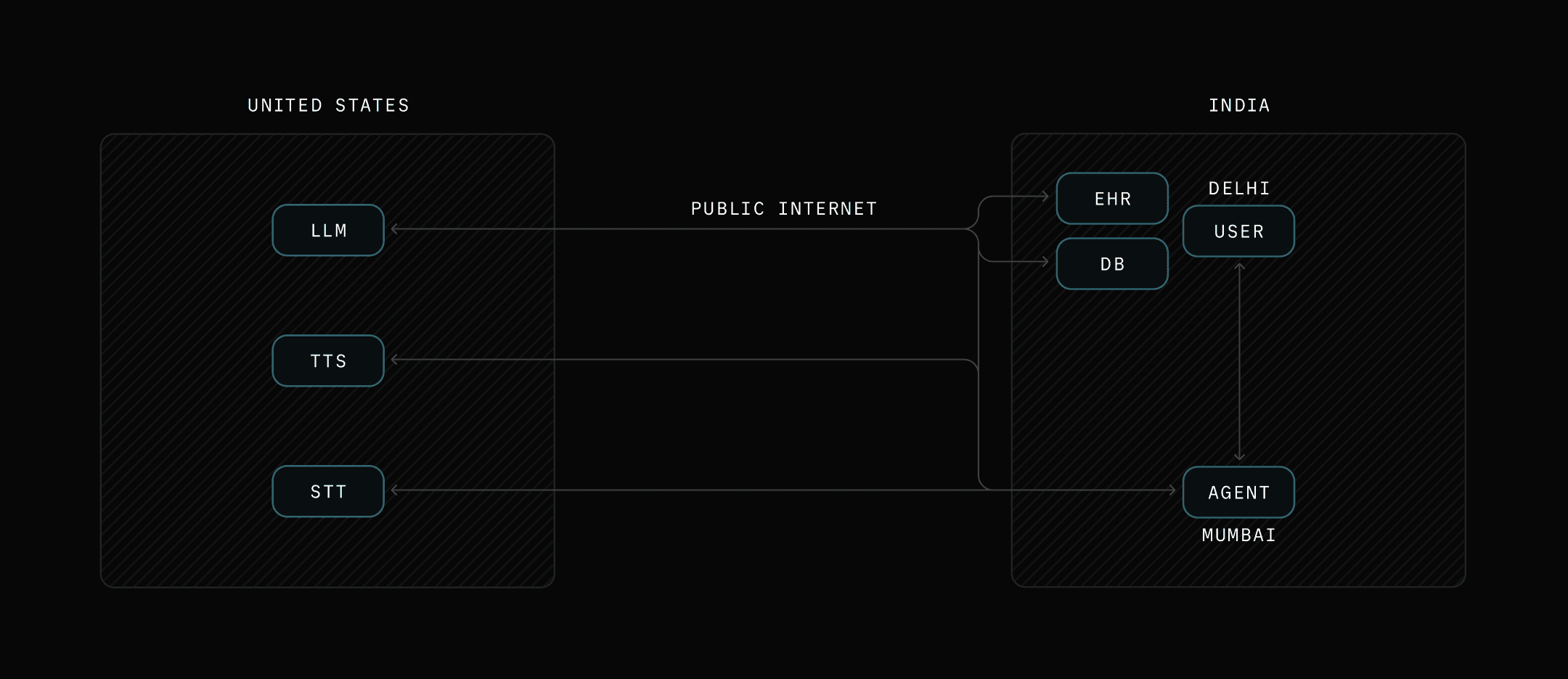

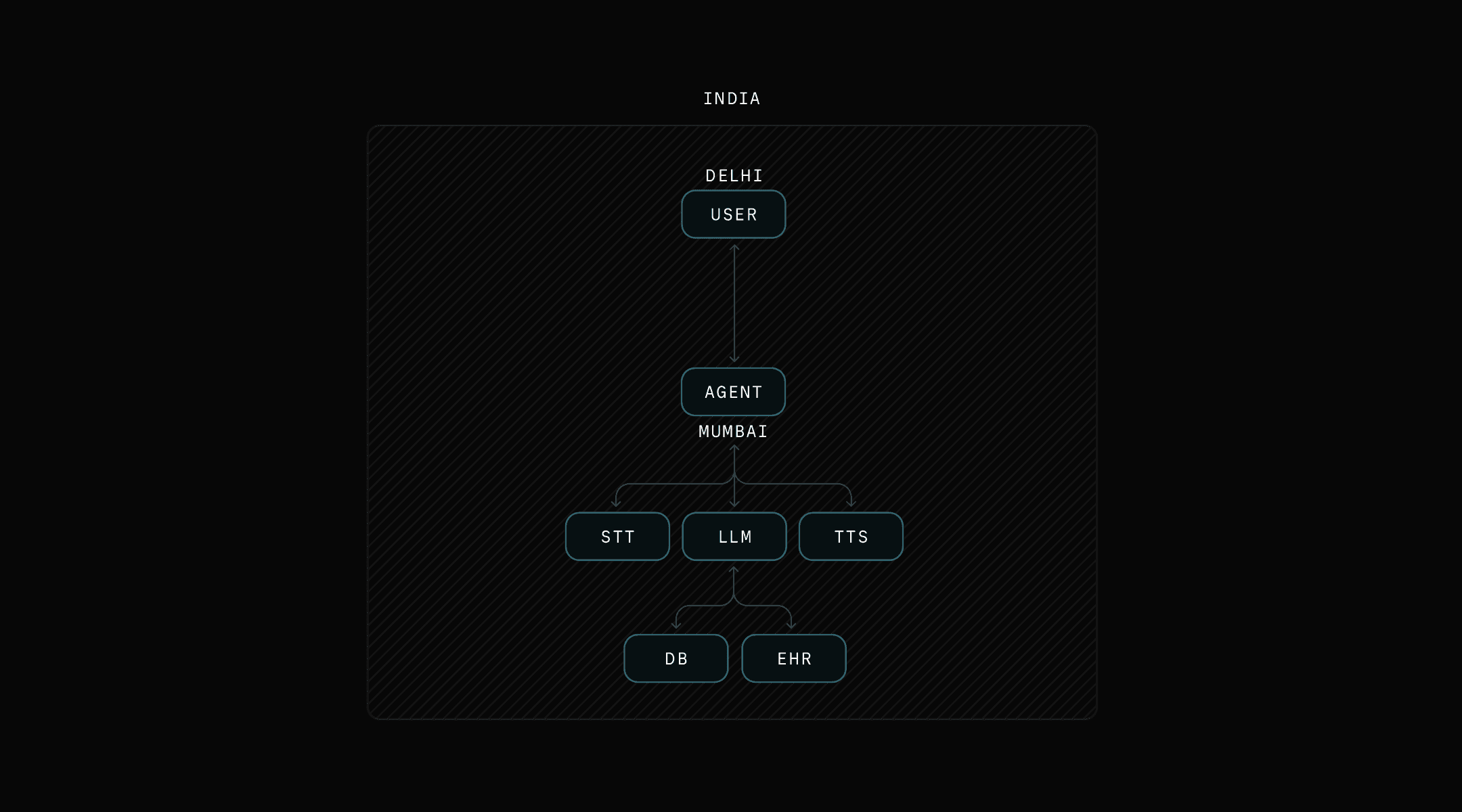

The key to building fast voice agents on LiveKit is to host your agents in a region close to your entire AI model stack, including your STT (speech-to-text model), LLM (large language model), and TTS (text-to-speech model).

There are two factors that affect the speed of your voice agent: User ↔ Agent latency and Agent ↔ Model latency.

User ↔ Agent latency:

- Audio packets between the user and the agent travel over LiveKit's optimized private network, so they can cover long distances with relatively little disruption.

- This means it's okay to have users far away from where your agent is as long as your agent is close to your model stack.

Agent ↔ Model latency:

- Every call from the agent to the AI models is sequential (e.g., the agent calls the LLM only after its STT call finishes).

- This includes any intermediary function calls your LLM might be making, such as to your database.

- Because this path involves more steps and each call depends on the previous one, it's important that each agent-to-model call takes the shortest possible path. The best way to achieve this is to geographically co-locate each AI model with your agent.
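To make the sequential dependency concrete, here is a minimal Python sketch using `asyncio`. The functions `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical stand-ins with placeholder delays, not LiveKit APIs. Each stage awaits the previous one, so the per-turn time is roughly the sum of all hops. Real pipelines typically stream partial results between stages, which overlaps some of this work, so treat this as a simplified model.

```python
import asyncio
import time

# Placeholder per-hop delays in seconds. Illustrative values only,
# not measurements of any real STT, LLM, or TTS service.
STT_DELAY = 0.05
LLM_DELAY = 0.10
TTS_DELAY = 0.05


async def fake_stt(audio: bytes) -> str:
    # Hypothetical stand-in for a speech-to-text call.
    await asyncio.sleep(STT_DELAY)
    return "transcript"


async def fake_llm(text: str) -> str:
    # Hypothetical stand-in for an LLM call; it needs the transcript first.
    await asyncio.sleep(LLM_DELAY)
    return f"reply to {text}"


async def fake_tts(text: str) -> bytes:
    # Hypothetical stand-in for a text-to-speech call; it needs the reply first.
    await asyncio.sleep(TTS_DELAY)
    return text.encode()


async def one_turn(audio: bytes) -> tuple[bytes, float]:
    """Run STT -> LLM -> TTS in order and return (speech, elapsed seconds)."""
    start = time.perf_counter()
    transcript = await fake_stt(audio)  # hop 1
    reply = await fake_llm(transcript)  # hop 2 cannot start before hop 1 ends
    speech = await fake_tts(reply)      # hop 3 cannot start before hop 2 ends
    return speech, time.perf_counter() - start


if __name__ == "__main__":
    speech, elapsed = asyncio.run(one_turn(b"audio"))
    print(f"turn took {elapsed:.3f}s")
```

Any extra round trip added to one of these stages, such as a cross-region hop or a tool call to a database, adds directly to the total.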

Voice agent latency comparison by architecture

Let's use an example where a user and an agent are both located in India, but the AI models are all located in the United States. Since the agent and models are not co-located, most requests travel between cloud regions, adding latency with each hop.

When the models and the agent are co-located, most requests travel short distances, and the one long leg, user-to-agent transport, runs on LiveKit's private network.
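The two layouts can be compared with back-of-the-envelope arithmetic. This minimal Python sketch counts network time only and uses made-up round-trip times: `SHORT_RTT` and `LONG_RTT` are hypothetical placeholders, not measurements, and `network_time_per_turn` is an illustrative helper. It also ignores that LiveKit's private network may make the long user leg cheaper than a comparable public hop.

```python
# Placeholder round-trip times in seconds. Illustrative only, not measurements.
SHORT_RTT = 0.01  # a hop within one cloud region
LONG_RTT = 0.25   # a hop between India and the United States

# STT -> LLM -> TTS. Each tool call the LLM makes would add another hop.
SEQUENTIAL_CALLS = 3


def network_time_per_turn(user_agent_rtt: float, agent_model_rtt: float,
                          sequential_calls: int) -> float:
    """Network time for one conversational turn.

    The user <-> agent leg is paid once per turn. Agent <-> model calls run
    one after another, so every call's round trip adds to the total.
    """
    return user_agent_rtt + sequential_calls * agent_model_rtt


# Agent in India next to the user; every model call crosses to the US.
agent_near_user = network_time_per_turn(SHORT_RTT, LONG_RTT, SEQUENTIAL_CALLS)
# Agent co-located with the models; only the user leg is long.
agent_near_models = network_time_per_turn(LONG_RTT, SHORT_RTT, SEQUENTIAL_CALLS)

print(f"agent near user:   {agent_near_user:.2f}s")
print(f"agent near models: {agent_near_models:.2f}s")
```

With these placeholder values the co-located layout comes out ahead (0.28s versus 0.76s per turn), because the long hop is paid once per turn instead of once per model call.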

Building an India-based voice AI stack

Model selection for Indian languages

Because of the large number of regional dialects across India, it's important to choose STT and TTS models that have broad language support. Here's what's currently available through LiveKit Inference and LiveKit Agents plugins:

| AI Model Type | Model Providers | Indian Language Support |
|---|---|---|
| STT | Deepgram Nova-3 (via LiveKit Inference, hosted in India) | Hindi and 7 other languages. Auto-routes to India regional deployment. |
| STT | Cartesia Ink Whisper (via LiveKit Inference) | 100+ languages, including Hindi |
| STT | Sarvam AI (via LiveKit Agents plugin) | Hindi, Tamil, Telugu, Kannada, Malayalam, Bengali, Marathi, Gujarati, Punjabi and more |
| TTS | Cartesia (via LiveKit Inference) | Multilingual, including Hindi |
| TTS | ElevenLabs (via LiveKit Inference) | 29+ languages, including Hindi |
| TTS | Rime (via LiveKit Inference) | Multilingual, including Hindi |
| TTS | Sarvam AI (via LiveKit Agents plugin) | 11 Indian languages, including Hindi, Tamil, Telugu, Bengali, Marathi, Gujarati, Kannada, Malayalam, Punjabi, Odia |
| LLM | Azure OpenAI (India) / Vertex Gemini (asia-south1) (via LiveKit plugins) | 50+ languages, including Hindi and other Indic languages |
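If you are shortlisting providers per language, the table above can be encoded as data. This minimal Python sketch is illustrative: `COVERAGE` and `providers_for` are hypothetical names, and the sets contain only the languages the table names explicitly. Rows described as "multilingual" or "N+ languages" therefore list only Hindi, so a missing language means "not listed here", not "unsupported". The LLM row is omitted because the table describes its coverage only in general terms.

```python
from typing import Dict, List, Set, Tuple

# (model type, provider) -> Indian languages the table names explicitly.
COVERAGE: Dict[Tuple[str, str], Set[str]] = {
    ("STT", "Deepgram Nova-3"): {"Hindi"},
    ("STT", "Cartesia Ink Whisper"): {"Hindi"},
    ("STT", "Sarvam AI"): {
        "Hindi", "Tamil", "Telugu", "Kannada", "Malayalam",
        "Bengali", "Marathi", "Gujarati", "Punjabi",
    },
    ("TTS", "Cartesia"): {"Hindi"},
    ("TTS", "ElevenLabs"): {"Hindi"},
    ("TTS", "Rime"): {"Hindi"},
    ("TTS", "Sarvam AI"): {
        "Hindi", "Tamil", "Telugu", "Bengali", "Marathi",
        "Gujarati", "Kannada", "Malayalam", "Punjabi", "Odia",
    },
}


def providers_for(model_type: str, language: str) -> List[str]:
    """Return providers of `model_type` whose table row names `language`."""
    return sorted(
        provider
        for (kind, provider), languages in COVERAGE.items()
        if kind == model_type and language in languages
    )


print(providers_for("TTS", "Odia"))  # ['Sarvam AI']
```

Keeping coverage as data makes it easy to update when providers add languages; check each provider's own documentation before relying on a row.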

If you want to minimize latency to serve users within India, here's an example stack we would recommend:

| Layer | Provider | Notes |
|---|---|---|
| Agent hosting | LiveKit Cloud Mumbai (ap-south) | Deploy agents in LiveKit Cloud's ap-south region in India. |
| STT | Deepgram (regional deployment via LiveKit Inference) | LiveKit-hosted Deepgram instance in India. |
| LLM | Azure OpenAI (India) or Vertex Gemini (asia-south1) | Use Azure's or Google's India cloud regions. |
| TTS | Cartesia (via LiveKit Inference) | Routed to Cartesia's India region through LiveKit. In-region failover is not guaranteed. |
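The recommended stack can also be written down as data and audited for anything that might send traffic outside India. In this minimal Python sketch, the `Layer` type and `audit` function are hypothetical, and each entry is transcribed from this guide: the Deepgram fallback to Frankfurt comes from the considerations at the end of this guide, and fallbacks the guide does not document are left as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Layer:
    name: str                # layer from the table, e.g. "STT"
    provider: str            # provider as listed in the table
    primary: str             # where the layer normally runs
    fallback: Optional[str]  # failover location, or None if not documented


# Transcribed from this guide. Undocumented fallbacks are None, not guesses.
INDIA_STACK = [
    Layer("Agent hosting", "LiveKit Cloud Mumbai", "India (ap-south)", None),
    Layer("STT", "Deepgram via LiveKit Inference", "India", "Germany (Frankfurt)"),
    Layer("LLM", "Azure OpenAI (India) or Vertex Gemini (asia-south1)", "India", None),
    Layer("TTS", "Cartesia via LiveKit Inference", "India", None),
]


def audit(stack: List[Layer], home: str = "India") -> List[Tuple[str, str]]:
    """Flag layers whose traffic could leave `home`, or whose failover is unknown."""
    findings = []
    for layer in stack:
        if not layer.primary.startswith(home):
            findings.append((layer.name, f"primary location is {layer.primary}"))
        if layer.fallback is None:
            findings.append((layer.name, "fallback location not documented"))
        elif not layer.fallback.startswith(home):
            findings.append((layer.name, f"may fail over to {layer.fallback}"))
    return findings


for name, reason in audit(INDIA_STACK):
    print(f"{name}: {reason}")
```

Flagging unknown fallbacks, rather than assuming they stay in-region, keeps the audit conservative for both latency and data residency reviews.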

When and how to host voice agents in India

With co-located, LiveKit-hosted Deepgram STT and an agent deployment region in Mumbai (ap-south), you can now host both your agent and your entire model stack in India.

To get the lowest latency, host your agent and the AI models it calls in the same region. However, if you need specific models that are only available in other regions (e.g., the latest GPT models or specialized TTS voices), it's best to host your agent in the region nearest your models rather than your end users.

Example performance: An agent hosted in LiveKit Cloud Mumbai (ap-south) using GPT-4o, Cartesia TTS, and co-located Deepgram STT achieves ~1.67s end-to-end latency, ~1s faster than with an equivalent model stack hosted in a different region.

Telephony for voice agents in India

For voice agents handling calls in India, we recommend using a regional SIP trunk provider. Using a SIP trunk has a few advantages:

- Local phone numbers: Indian users are far more likely to pick up a call from a local number (+91) than an international one. Local numbers also enable features like Do Not Disturb (DND) registry compliance.

- Better call quality: Calls that originate and terminate within India avoid international hops, reducing jitter and packet loss.

- TRAI regulation: The Telecom Regulatory Authority of India (TRAI) has specific requirements around number provisioning, call handling, and caller ID, and a local SIP trunk helps you meet them. All telephony calls are also subject to Department of Telecommunications (DoT) data residency requirements.

LiveKit works with most SIP trunk providers. Here's how to get started:

- Get phone numbers from a local SIP provider (Plivo, Twilio, Exotel).

- Configure your SIP trunk to point to LiveKit's India SIP endpoint.

- Enable region pinning by contacting LiveKit. Region pinning helps keep inbound and outbound calls within the India region.

- Deploy your agent in LiveKit Cloud's Mumbai (ap-south) region.
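For the phone-number step, a small script can check that your numbers are well-formed +91 numbers before you register them. This minimal Python sketch assumes the inbound trunk request body has the shape `{"trunk": {"name": ..., "numbers": [...]}}`, which is our understanding of LiveKit's inbound trunk JSON; confirm it against the current LiveKit SIP docs. The function `inbound_trunk_request`, the trunk name, and the phone number are hypothetical.

```python
import json
import re
from typing import Dict, List

# E.164 format for an Indian number: +91 followed by a 10-digit national number.
# This checks the format only, not whether the number is provisioned to you.
INDIAN_E164 = re.compile(r"\+91[0-9]{10}")


def inbound_trunk_request(name: str, numbers: List[str]) -> Dict[str, dict]:
    """Build a request body for a LiveKit inbound SIP trunk.

    Assumption: the {"trunk": {"name": ..., "numbers": [...]}} shape mirrors
    LiveKit's inbound trunk JSON as we understand it.
    """
    invalid = [n for n in numbers if not INDIAN_E164.fullmatch(n)]
    if invalid:
        raise ValueError(f"not +91 E.164 numbers: {invalid}")
    return {"trunk": {"name": name, "numbers": list(numbers)}}


if __name__ == "__main__":
    # Hypothetical trunk name and number; substitute the ones from your provider.
    body = inbound_trunk_request("india-inbound", ["+919876543210"])
    print(json.dumps(body, indent=2))
```

Assuming the LiveKit CLI is installed, you could redirect this output to a file such as `inbound-trunk.json` and register it with `lk sip inbound create inbound-trunk.json`. Pointing the trunk at LiveKit's India SIP endpoint and enabling region pinning happen separately, with your provider and with LiveKit, so they are not shown here.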

Additional considerations for LiveKit voice agents in India

- Fallback Availability: LiveKit offers co-located Deepgram STT models in Mumbai, India, which fall back to Deepgram's Frankfurt, Germany region if the Mumbai deployment is unavailable.

- Language Support: LiveKit's co-located Deepgram STT models currently support only English and Hindi for nova-2-general and only English for flux-general. More details are available here.

- Questions: If you have any questions about optimal setup or compliance requirements, reach out here or in our community. Regulatory and data residency requirements vary by customer and use case.

- Getting Started: Check out the LiveKit Agents quickstart to get your first agent running, then adapt with the India stack config above.